If you’ve spent any time in tech circles or in an AI Slack lately, you’ve probably heard the word “agent” thrown around like confetti. Everyone seems to have one. Some claim their agent can schedule meetings, others say theirs can run your cloud, and a few insist that agents will soon replace entire teams. Yet when you ask what an AI agent actually is, the answers get vague fast.

TL;DR: An AI agent is a system that uses a model to reason, tools to act, and feedback to learn, turning human intent into autonomous execution.

At its core, an agent is software that doesn’t just respond to you; it acts on your behalf. That single distinction is what separates today’s chatbots from tomorrow’s autonomous systems. A chatbot answers questions; an agent interprets a goal and figures out how to achieve it. Tell a chatbot, “Find me flights to London,” and it lists prices. Tell an agent, and it compares options, books the ticket, and emails you the receipt. The agent is no longer a tool you query; it’s a worker you delegate to.

From Model to Agent: How Autonomy Emerges

Most of what we call “AI” right now still revolves around large language models (LLMs). These models are brilliant pattern matchers: they take a prompt, predict the next word, and repeat that prediction until they’ve produced a useful response. But they stop there. They don’t persist memory, they don’t touch real systems, and they don’t decide what to do next.

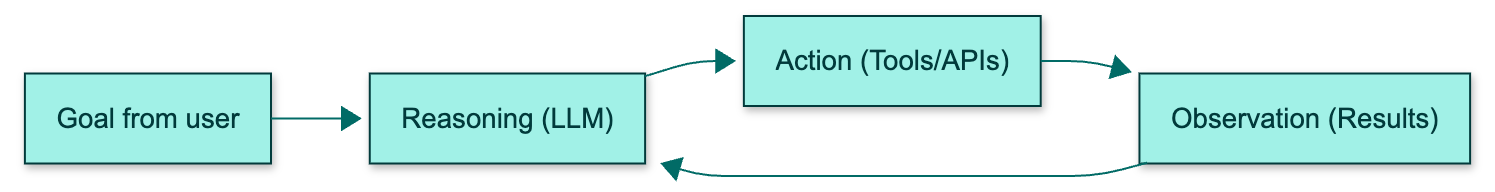

An agent wraps a model in a scaffolding that adds those missing pieces. The model becomes the reasoning core, but around it sit memory, tools, and control logic. Together they create a feedback loop that lets the system think, act, observe the outcome, and adjust. It’s the difference between asking a friend for advice and giving them your credit card.
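The think, act, observe, adjust loop is easier to see in code. Here is a minimal sketch, not any particular framework's implementation: `fake_model`, `search`, and `run_agent` are hypothetical names, and the "model" is a hard-coded rule standing in for a real LLM call so the example runs on its own.

```python
from typing import Callable

# Hypothetical stand-in for an LLM call. A real agent would send the goal and
# history to a model and parse its reply; a fixed rule keeps this runnable.
def fake_model(goal: str, history: list[str]) -> tuple[str, str]:
    """Return (action, argument) given the goal and what has been observed."""
    if not history:
        return ("search", goal)       # nothing observed yet: go look
    return ("finish", history[-1])    # something observed: report it and stop

# Hypothetical tool that pretends to look something up.
def search(query: str) -> str:
    return f"results for {query!r}"

TOOLS: dict[str, Callable[[str], str]] = {"search": search}

def run_agent(goal: str, max_steps: int = 5) -> str:
    history: list[str] = []                        # short-term memory
    for _ in range(max_steps):                     # control logic: bounded loop
        action, arg = fake_model(goal, history)    # think
        if action == "finish":
            return arg
        observation = TOOLS[action](arg)           # act
        history.append(observation)                # observe; adjust on next pass
    return "stopped: step budget exhausted"

print(run_agent("flights to London"))
```

The `max_steps` cap is the important design choice here: without it, nothing forces the loop to end if the model never decides it is finished.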

What’s Inside an AI Agent: Memory, Tools, and Logic

Think of an AI agent as a small ecosystem. The brain is the large language model that performs reasoning. The memory, both short-term context and long-term storage, lets it remember what’s happening and recall what has happened before. The tools are how it touches the outside world: APIs, databases, scripts, or even browsers. The environment defines where it runs, whether that’s your laptop, a cloud container, or a controlled sandbox. Overseeing everything is an orchestrator that decides which action to take next and when to stop.

Each part matters because autonomy without constraint quickly becomes chaos. Memory without orchestration leads to AI agents that never shut up; orchestration without memory produces ones that forget what they were doing mid-task.
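To make the parts concrete, here is a rough sketch of how memory, tools, and an orchestrator might fit together. Everything in it is an assumption made for illustration: the `Memory` and `Agent` classes are hypothetical, and the model is replaced by a pre-computed plan of `(tool, argument)` steps so the focus stays on memory and the stopping rule.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Memory:
    # Short-term context keeps only the most recent items; older ones fall off.
    short_term: deque = field(default_factory=lambda: deque(maxlen=3))
    # Long-term storage keeps everything, keyed for later recall.
    long_term: dict = field(default_factory=dict)

    def remember(self, key: str, value: str) -> None:
        self.short_term.append(value)
        self.long_term[key] = value

@dataclass
class Agent:
    tools: dict[str, Callable[[str], str]]
    memory: Memory = field(default_factory=Memory)
    max_steps: int = 4   # the orchestrator's "when to stop" rule

    def run(self, plan: list[tuple[str, str]]) -> list[str]:
        """Orchestrator: execute planned (tool, argument) steps within a budget."""
        for step, (tool_name, arg) in enumerate(plan):
            if step >= self.max_steps:
                break                               # budget spent: stop acting
            result = self.tools[tool_name](arg)     # touch the outside world
            self.memory.remember(f"step-{step}", result)
        return list(self.memory.short_term)

agent = Agent(tools={"upper": str.upper})
recent = agent.run([("upper", w) for w in ["a", "b", "c", "d", "e", "f"]])
print(recent)                        # only the three most recent results
print(len(agent.memory.long_term))   # every executed step is stored long-term
```

Six steps are planned but only four run, because the orchestrator enforces its budget. Of those four results, short-term memory holds the last three while long-term storage keeps all of them.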

Why AI Agents Matter for Automation and Efficiency

Agents represent a shift from explicit programming to adaptive automation. Traditional software executes instructions exactly as written; agents infer what you want and improvise. That flexibility is why they’ve exploded across industries. A customer-support agent can now read a ticket, check order status, issue a refund, and write a summary for the CRM. A DevOps agent can monitor cloud costs and automatically right-size compute. These aren’t science-fiction demos anymore; they're early production experiments running inside large organizations.

The excitement is justified, but it hides a practical truth: the more capable the agent, the more dangerous its mistakes become. When an agent misreads intent, it doesn’t just give a wrong answer; it can trigger a workflow, modify a record, or deploy code to production. In other words, the margin for error shrinks as autonomy increases.

The Messy Reality of Building AI Agents

Anyone who has built one knows agents are fragile creatures. They loop endlessly when goals are vague. They hallucinate facts, misuse tools, or forget constraints. Give them permission to run code and they will, sometimes in ways you didn’t expect. Security researchers have already shown that carefully crafted prompts can convince an agent to leak data or execute malicious commands. The flaw isn’t malevolence; it’s a lack of guardrails. We’ve handed probabilistic reasoning engines the keys to deterministic systems.
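One common application-level guardrail is a default-deny check that sits between the model's proposed action and the tool that would carry it out. The sketch below is a hypothetical policy, not a complete defense: the tool names, the `review` function, and the length limit are all invented for illustration, and a check like this complements isolation at the execution layer rather than replacing it.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ToolCall:
    name: str
    argument: str

# Hypothetical policy: tool names and limits are made up for this example.
READ_ONLY_TOOLS = {"check_order_status", "read_ticket"}
NEEDS_APPROVAL = {"issue_refund"}    # side effects require a human sign-off
MAX_ARG_LENGTH = 200                 # crude limit on argument size

def review(call: ToolCall, approved_by_human: bool = False) -> str:
    """Return 'allow', 'needs_approval', or 'deny' for a proposed tool call."""
    if len(call.argument) > MAX_ARG_LENGTH:
        return "deny"
    if call.name in READ_ONLY_TOOLS:
        return "allow"
    if call.name in NEEDS_APPROVAL:
        return "allow" if approved_by_human else "needs_approval"
    return "deny"    # default-deny: anything not explicitly listed is refused

print(review(ToolCall("read_ticket", "T-1")))
print(review(ToolCall("issue_refund", "order 42")))
print(review(ToolCall("run_shell", "rm -rf /")))
```

The key choice is the final `return "deny"`. An agent that hallucinates a tool name, or is talked into one by a crafted prompt, hits a refusal instead of an execution path.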

Infrastructure Is the Safety Net: Securing AI Agents

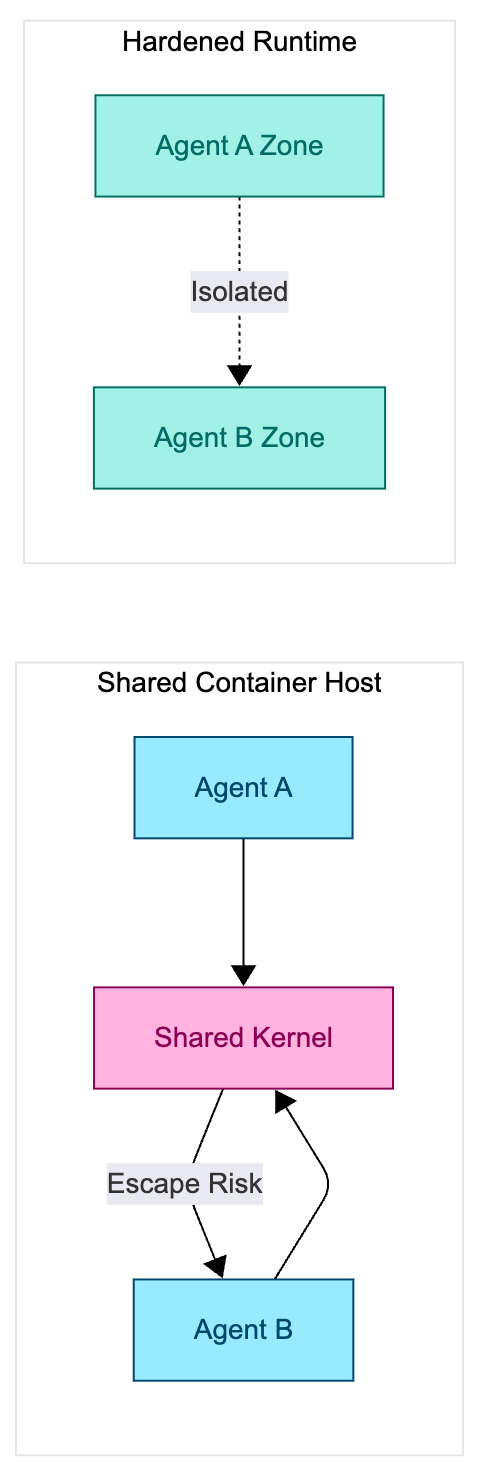

Because agents can execute code and access resources, they blur the line between application and infrastructure. Each one is, in effect, a tiny runtime. When something goes wrong, it doesn’t just crash an app; it can compromise a system. That’s why containment has become the next frontier of agent design.

Most frameworks rely on Linux containers for isolation, but containers were built for efficiency, not security. They share the host kernel and devices, which means a single exploit can cross boundaries. A hardened runtime like Edera changes this dynamic. It operates as an execution layer designed with an "assume breach" security model, enforcing strict isolation. Each agent runs in its own sealed environment, eliminating the conditions that enable privilege escalation and data leakage.

This is where the discipline of infrastructure security intersects with the creativity of AI development. The more autonomous the system, the more critical its boundaries become. Prevention has to move into the execution layer itself; detection alone can’t keep up with a process that rewrites its own instructions.

The Future of AI Agents and Infrastructure Security

We’re still early in the agent era, and the landscape is shifting weekly. Frameworks like AutoGen and CrewAI are experimenting with multi-agent collaboration. Companies are exploring “agent marketplaces” where specialized agents trade skills or data. Standards such as the Model Context Protocol (MCP) aim to make different agents interoperable while preserving control. And regulators are beginning to ask what accountability looks like when a line of reasoning, not a human, triggers an action that costs money or exposes data.

The trajectory feels familiar: first excitement, then complexity, then consolidation. We saw it with web apps, cloud, and containers. Agents are simply the next iteration: systems that don’t just scale compute but scale decision-making.

So…What Is an AI Agent, Really?

An AI agent is a piece of software that uses a model to reason, memory to remember, tools to act, and feedback to learn. It’s a system that turns intent into execution. The idea sounds simple until you realize that execution means running real code, touching real systems, and creating real risk.

If the last decade of infrastructure taught us anything, it’s that flexibility without security is a ticking clock. Agents will only reach their potential when the environments they run in are as resilient as the models that power them. Hardened runtimes, verified isolation, and clear operational boundaries aren’t just the unglamorous plumbing behind the revolution; they're what will make it sustainable.

The next time someone tells you their platform runs on “AI agents,” ask them a simple question: where do those agents live, and how safe are their homes?

FAQs

What is an AI agent?

An AI agent is software that uses a model to reason, tools to act, and feedback to learn—turning human goals into autonomous execution.

How are AI agents different from chatbots?

Chatbots respond to input; AI agents take action. Agents interpret goals and use tools or APIs to complete tasks autonomously.

Why does AI agent security matter?

Because agents can execute real code and access systems, isolation and runtime security are critical to prevent unintended or malicious actions.

How does Edera help secure AI agents?

Edera provides a hardened runtime that isolates every agent in its own environment, ensuring safe, controlled execution.

“WTF is...?” series

- What the F*ck Is Paravirtualization?

- What the F*ck Is A Zone? Secure Container Isolation with Edera

- What the F*ck Is Container Isolation? Security in Kubernetes & Beyond

- What the F*ck Is a Hardened Runtime? The Future of Container Security

- What The F*ck Is Multitenancy? Secure & Efficient Containers Explained

- More coming soon...